OpenAI’s chatbot, ChatGPT, has sparked a lively debate in the AI community, particularly about the privacy risks posed by its data retention policies. Critics worry that users may inadvertently share sensitive information, which OpenAI could then use to train its models.

A glaring example is the Samsung incident, in which employees of the tech giant accidentally leaked confidential information through ChatGPT on three separate occasions. The leaked material included internal business practices, proprietary code they were troubleshooting, and the minutes of a team meeting. Incidents like these illustrate the risks organizations take when they adopt AI chatbots such as ChatGPT without a thorough understanding of the privacy implications.

Why Data Security Matters

The impact of such oversharing can be far-reaching. When employees paste code into ChatGPT for troubleshooting, for instance, that code is stored on OpenAI’s servers, outside the company’s control. If that data were ever exposed, companies working on unreleased products and programs could see details of their business plans, upcoming releases, and prototypes leak, with significant financial consequences.

Data breaches are an ever-present threat in our increasingly digital world, and their consequences can be severe. For individuals, a breach can lead to identity theft, financial loss, and threats to personal safety. Leaked personal data can be used to commit fraud, gain unauthorized access to online accounts, or even facilitate targeted harassment or stalking.

The stakes are even higher for businesses. Leaks of confidential data can cost a company its competitive edge, its customers’ trust, and significant revenue. Leaked proprietary code or unreleased product details, for example, could hand competitors an unfair advantage. Breaches involving customer data can erode trust and damage the company’s reputation, driving customers away. Some breaches also bring regulatory fines and legal action, adding to the financial burden and potentially causing lasting damage to the company’s brand.

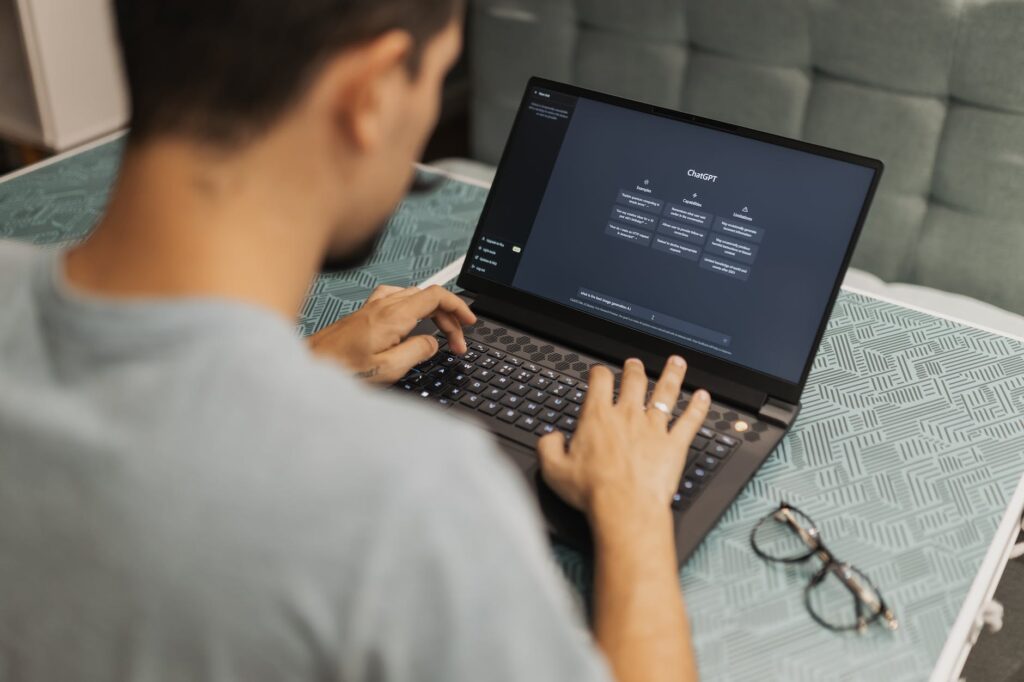

Despite warnings and guidelines, oversharing on ChatGPT is common. According to research by the data security company Cyberhaven, as many as 6.5% of employees have pasted company data into ChatGPT, and 3.1% have pasted sensitive data into it. These figures come from an analysis of 1.6 million workers at companies that use Cyberhaven’s product.

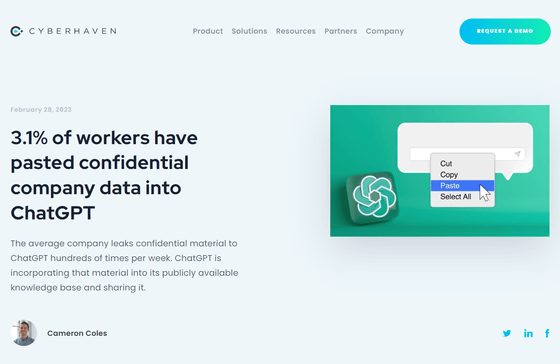

Here’s What OpenAI Says About Security

ChatGPT’s FAQ states that specific prompts cannot be deleted from a user’s conversation history and warns, “Please don’t share any sensitive information in your conversations.” Not every user heeds this advice, which creates the potential for privacy breaches. OpenAI is also open about using user data to improve its models, which means information you share privately could end up shaping the answers other people receive.

Compounding the problem, traditional cybersecurity tools cannot stop users from pasting text into ChatGPT in a web browser. As a result, organizations often cannot fully assess the scope of the problem, which highlights the need for user education and stronger safeguards built into AI interfaces.

In the context of AI chatbots like ChatGPT, these risks underscore the importance of using such tools responsibly and understanding the data privacy implications. It’s not just about the data being collected, but also how it’s used, stored, and potentially exposed.

Given these risks, some companies have taken decisive action. Walmart, Amazon, and Microsoft, one of OpenAI’s biggest backers, have warned employees not to share confidential information with ChatGPT. Others, such as JPMorgan Chase and Verizon, have gone a step further and banned employees from using ChatGPT outright.

Safer Options Out There

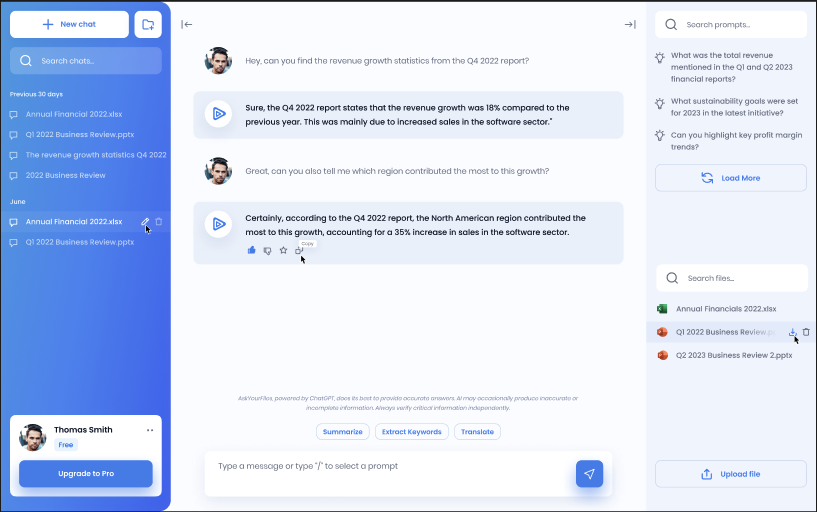

While the ChatGPT API is designed with safety measures and OpenAI has clarified that it does not use data sent via the API to improve its models, concerns persist around the data entered directly into the chat interface. For those who are not developers and are looking for a user-friendly option, platforms like AskYourFiles offer a safer alternative.

AskYourFiles stores user data in vector databases and keeps the data behind your AI instances or bots exclusive to you rather than sharing it with other users. This not only provides a more personalized AI experience but also significantly reduces the risk of data exposure. With AskYourFiles, your data is never used to improve the core GPT model, which adds an extra layer of privacy protection. By staying informed and choosing platforms that prioritize data privacy, users can benefit from the power of AI while minimizing the risks.